Gender recognition using a gaze-guided self-attention mechanism robust against background bias in training samples

[Abstract]

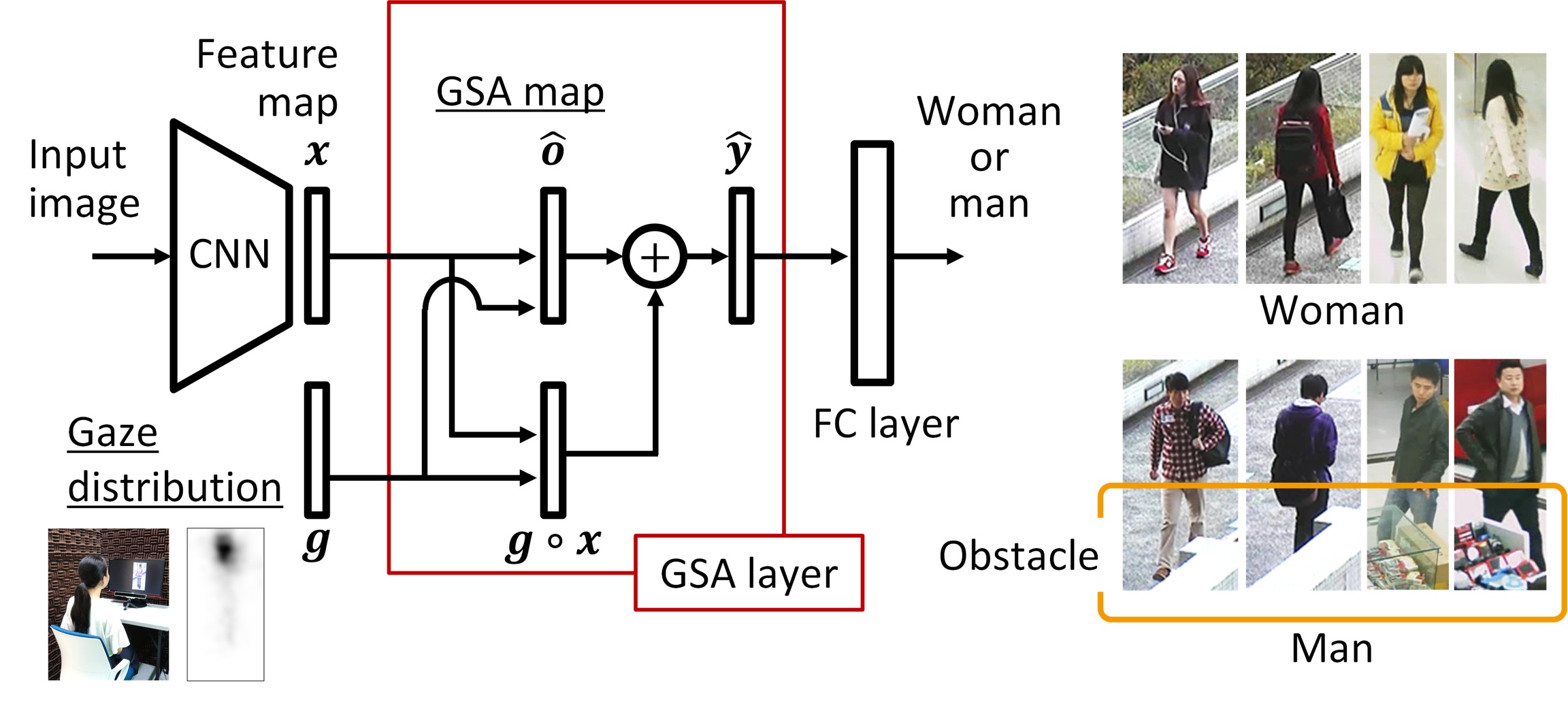

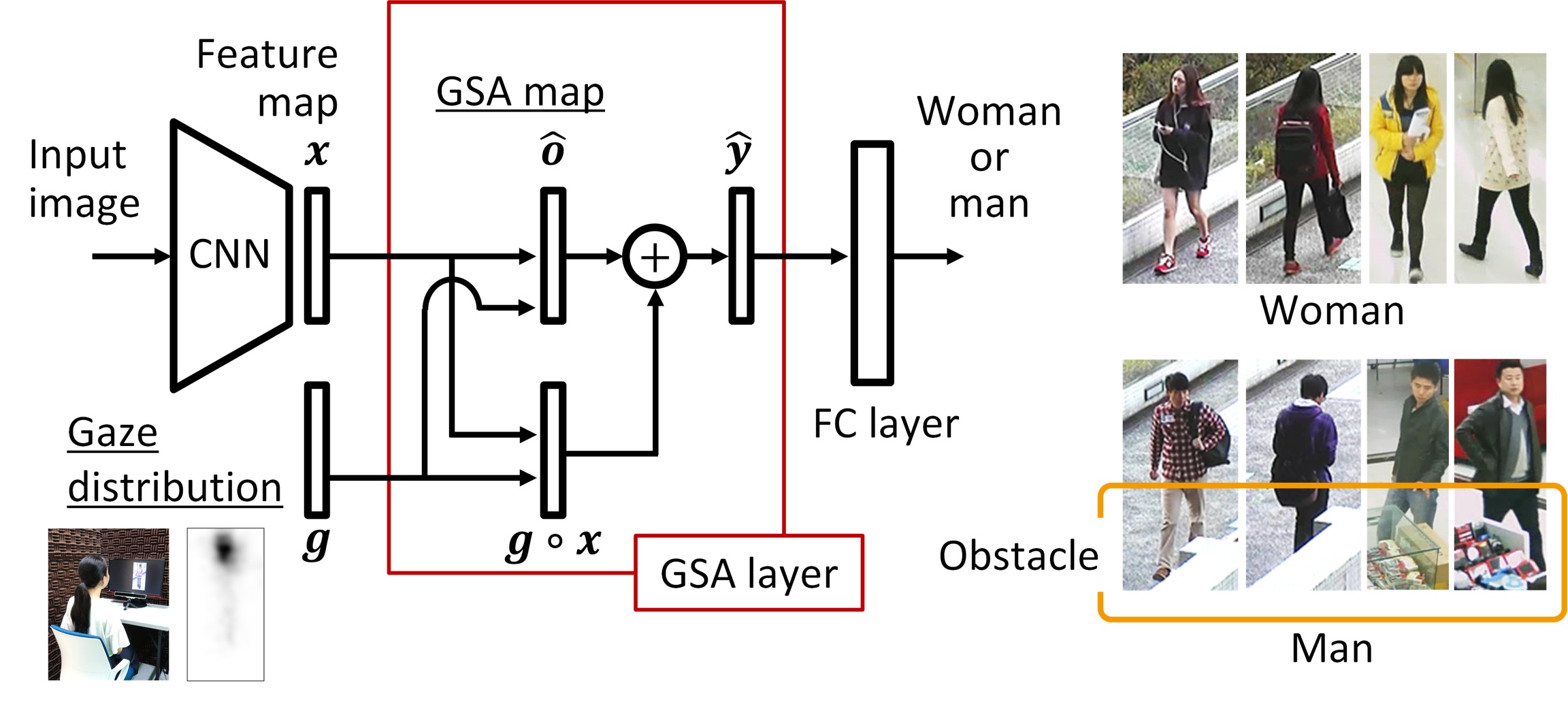

We propose an attention mechanism for deep learning networks that performs gender recognition using the gaze distribution of human observers as they judge the gender of people in pedestrian images.

Prevalent attention mechanisms calculate attention weights from the spatial correlations among the values of all cells in an input feature map.
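To make the correlation step concrete, the following is a minimal NumPy sketch of a generic spatial self-attention over a feature map. It is an illustration under stated assumptions, not the implementation of any specific method compared in this work: the function name `spatial_self_attention`, the scaled dot-product form of the correlation, and the absence of learned query/key/value projections are all simplifications chosen here.

```python
import numpy as np

def spatial_self_attention(feature_map):
    """Generic spatial self-attention (illustrative sketch, no learned projections).

    feature_map: array of shape (C, H, W).
    Returns the attended map of shape (C, H, W) and the (H*W, H*W) attention weights.
    """
    c, h, w = feature_map.shape
    # Treat every spatial cell as a C-dimensional vector: shape (H*W, C).
    cells = feature_map.reshape(c, h * w).T
    # Correlation between every pair of cells (scaled dot product).
    scores = cells @ cells.T / np.sqrt(c)
    # Row-wise softmax, shifted by the row maximum for numerical stability.
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    # Each output cell is a weighted sum over all cells, background included.
    attended = (weights @ cells).T.reshape(c, h, w)
    return attended, weights
```

Because every cell is correlated with every other cell, background cells contribute to the weights in the same way as cells on the person.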

If the backgrounds of the pedestrian images are strongly biased (e.g., the training samples and test samples contain different backgrounds), the attention weights learned using the prevalent attention mechanisms are affected by the bias, which in turn reduces the accuracy of gender recognition.

To avoid this problem, we incorporate an attention mechanism called gaze-guided self-attention (GSA) that is inspired by human visual attention.

Our method assigns spatially suitable attention weights to each input feature map using the gaze distribution of human observers.
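The idea of weighting a feature map with a gaze distribution can be sketched as follows. This is a hedged illustration only: the abstract does not specify how GSA combines the gaze distribution with the feature map, so the average pooling to the feature-map resolution, the max-normalization, the element-wise multiplication, and the name `gaze_guided_weighting` are all assumptions made for this example.

```python
import numpy as np

def gaze_guided_weighting(feature_map, gaze_map, eps=1e-8):
    """Weight a feature map by a gaze heat map (hypothetical sketch, not the authors' GSA).

    feature_map: array of shape (C, H, W).
    gaze_map:    array of shape (GH, GW) of non-negative gaze densities,
                 where GH and GW are integer multiples of H and W.
    Returns the weighted feature map and the (H, W) spatial weights.
    """
    c, h, w = feature_map.shape
    gh, gw = gaze_map.shape
    if gh % h != 0 or gw % w != 0:
        raise ValueError("gaze_map size must be a multiple of the feature map size")
    # Average-pool the gaze map down to the feature-map resolution (assumption).
    pooled = gaze_map.reshape(h, gh // h, w, gw // w).mean(axis=(1, 3))
    # Scale so the most-attended cell has weight close to 1 (assumption).
    weights = pooled / (pooled.max() + eps)
    # Apply the same spatial weights to every channel via broadcasting.
    return feature_map * weights[None, :, :], weights
```

In this sketch the weights depend only on where observers looked, not on the image content, so a change of background between training and test samples does not alter them.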

In particular, GSA yields promising results even when the training samples contain background bias.

Experiments on publicly available datasets confirm that GSA, by using the gaze distribution, recognizes gender more accurately than currently available attention-based methods when there is background bias between training and test samples.

[Publications]