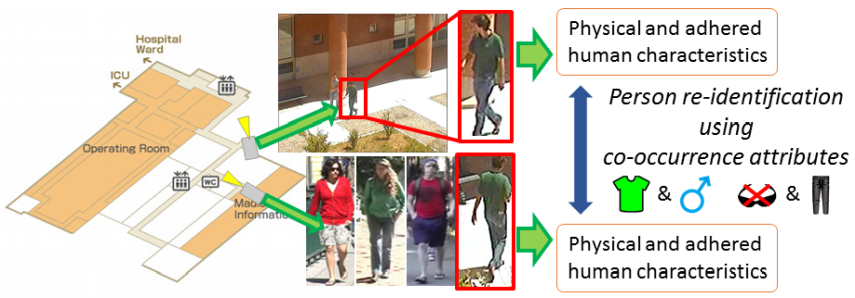

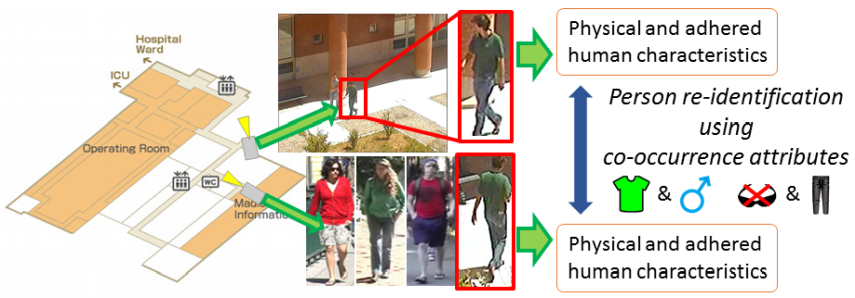

Person Re-identification using Co-occurrence Attributes of Physical and Adhered Human Characteristics

[Abstract]

We propose a novel method for extracting features from images of people using co-occurrence attributes, and we use these features for person re-identification. Existing methods extract features based on simple attributes such as gender, age, hair style, or clothing. Our method instead extracts more informative features using co-occurrence attributes, which are combinations of physical and adhered human characteristics (e.g., a man wearing a suit, a woman in her twenties, or a person with long hair wearing a skirt). We designed these co-occurrence attributes using prior knowledge of the search methods that public websites use to find people. Our method first trains a classifier for each co-occurrence attribute. Given an input image of a person, we form a feature vector from the confidence scores estimated by these classifiers, and we compute the distance between the input and reference vectors using a metric obtained by metric learning. Experiments on several publicly available datasets show that our method substantially improves person re-identification matching performance compared with existing methods. We also demonstrate how to identify the most important co-occurrence attributes.
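The matching pipeline described in the abstract (per-attribute classifier confidences stacked into a feature vector, then a learned distance between vectors) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the attribute names are hypothetical, each attribute classifier is stood in for by a linear model with a sigmoid output, the data is synthetic, and because the abstract does not name its metric-learning technique, KISSME (a Mahalanobis matrix built from the inverse covariances of matched and mismatched pair differences) is swapped in purely for illustration.

```python
import numpy as np

# Hypothetical co-occurrence attributes (not the paper's actual list).
ATTRIBUTES = ["man_wearing_suit", "woman_in_her_twenties", "long_hair_wearing_skirt"]


def attribute_feature(descriptor, weights, biases):
    """Vectorize co-occurrence attribute confidences for one person image.

    Assumption: each attribute has a linear classifier over a generic image
    descriptor, and its sigmoid output is used as a confidence in [0, 1].
    """
    scores = weights @ descriptor + biases
    return 1.0 / (1.0 + np.exp(-scores))


def learn_kissme(feat_a, feat_b, same, ridge=1e-6):
    """Learn a Mahalanobis matrix M from labeled pairs (KISSME, assumed here).

    feat_a, feat_b: (n_pairs, n_attributes) attribute vectors of paired images.
    same: boolean mask, True where a pair shows the same person.
    """
    diffs = feat_a - feat_b
    k = diffs.shape[1]
    d_same, d_diff = diffs[same], diffs[~same]
    # Second-moment matrices of pair differences (zero-mean assumption).
    cov_same = d_same.T @ d_same / len(d_same) + ridge * np.eye(k)
    cov_diff = d_diff.T @ d_diff / len(d_diff) + ridge * np.eye(k)
    m = np.linalg.inv(cov_same) - np.linalg.inv(cov_diff)
    # Clip negative eigenvalues so M is positive semidefinite.
    eigvals, eigvecs = np.linalg.eigh(m)
    return (eigvecs * np.clip(eigvals, 0.0, None)) @ eigvecs.T


def mahalanobis_sq(m, x, y):
    """Squared learned distance (x - y)^T M (x - y) between attribute vectors."""
    d = x - y
    return float(d @ m @ d)


# Synthetic demo: each person has a latent appearance seen by two cameras.
rng = np.random.default_rng(0)
n_people, dim = 200, 16
k = len(ATTRIBUTES)
weights = rng.normal(size=(k, dim))  # hypothetical classifier weights
biases = rng.normal(size=k)

identity = rng.normal(size=(n_people, dim))
view_a = identity + 0.1 * rng.normal(size=identity.shape)  # camera A
view_b = identity + 0.1 * rng.normal(size=identity.shape)  # camera B
feat_a = np.array([attribute_feature(x, weights, biases) for x in view_a])
feat_b = np.array([attribute_feature(x, weights, biases) for x in view_b])

# Training pairs: matched (i, i) and mismatched (i, i - 1).
mismatched_b = np.roll(feat_b, 1, axis=0)
pairs_a = np.vstack([feat_a, feat_a])
pairs_b = np.vstack([feat_b, mismatched_b])
same = np.r_[np.ones(n_people, bool), np.zeros(n_people, bool)]
M = learn_kissme(pairs_a, pairs_b, same)
```

In this sketch, M weights directions in attribute space by how much less they vary between images of the same person than between different people; ranking reference images by `mahalanobis_sq` against an input vector then gives the re-identification result.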

[Publication]

[Publication (Japanese)]

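- 中野 翔太, 四元 辰平, 吉村 宏紀, 西山 正志, 岩井 儀雄, 菅原 一孔,
  "Person matching using co-occurrence attributes of body and appearance" (身体と外見の共起属性を用いた人物対応付け),
  IEICE Transactions on Information and Systems (Japanese Edition), Vol.J100-D, No.1, pp.104-114, January 2017.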