Comparing the Recognition Accuracy of Humans and Deep Learning on a Simple Visual Inspection Task

[Abstract]

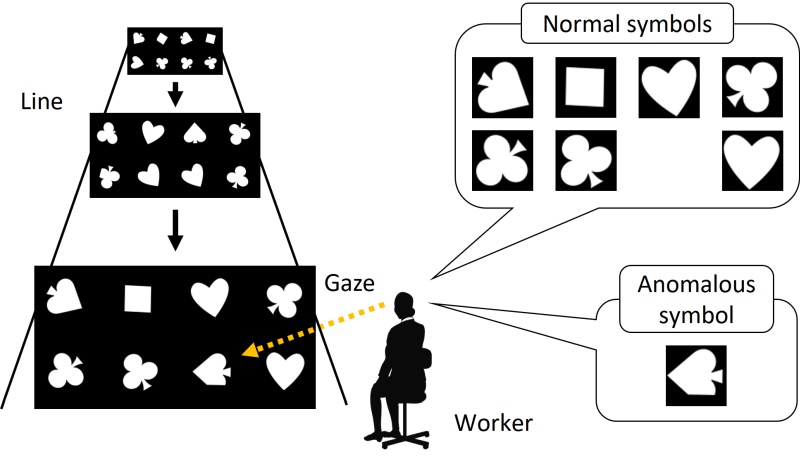

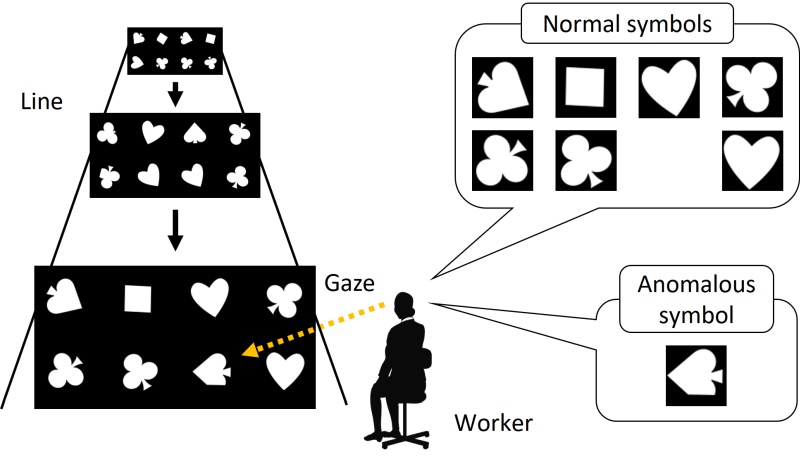

In this paper, we investigate how many training samples deep learning techniques require to achieve higher inspection accuracy than humans on a simple visual inspection task. We also examine whether, once deep learning techniques outperform human subjects, the two differ in which anomalies they find. To this end, we design a simple task that non-experts can perform, in which participants distinguish between normal and anomalous symbols in images. For this task, we automatically generated a large number of training samples containing normal and anomalous symbols. The results show that the deep learning techniques required several thousand training samples to detect the locations of the anomalous symbols and tens of thousands to segment them. We also confirmed that, compared with humans, deep learning techniques have both advantages and disadvantages in identifying anomalies.
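The abstract does not say how the training samples were generated. Purely as an illustration, the Python sketch below shows one way to synthesize images of this kind together with the two label types the abstract distinguishes: bounding boxes for locating anomalous symbols (detection) and a pixel mask for segmenting them. The 5×5 glyphs, the grid layout, and the `make_sample` function are hypothetical assumptions, not the authors' generator.

```python
import random
from typing import List, Tuple

Grid = List[List[int]]
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), max exclusive

# Hypothetical glyphs (assumption): a "normal" plus sign and an
# "anomalous" variant with its right arm missing.
NORMAL = ["..#..", "..#..", "#####", "..#..", "..#.."]
ANOMALOUS = ["..#..", "..#..", "###..", "..#..", "..#.."]
GLYPH = 5  # side length of each glyph cell in pixels


def make_sample(rows: int, cols: int, n_anomalies: int,
                rng: random.Random) -> Tuple[Grid, List[Box], Grid]:
    """Return (binary image, detection boxes, segmentation mask) for one sample."""
    h, w = rows * GLYPH, cols * GLYPH
    image = [[0] * w for _ in range(h)]
    mask = [[0] * w for _ in range(h)]
    cells = [(r, c) for r in range(rows) for c in range(cols)]
    # Choose which grid cells receive an anomalous symbol.
    anomalous_cells = set(rng.sample(cells, n_anomalies))
    boxes: List[Box] = []
    for r, c in cells:
        is_anomaly = (r, c) in anomalous_cells
        glyph = ANOMALOUS if is_anomaly else NORMAL
        y0, x0 = r * GLYPH, c * GLYPH
        for dy, line in enumerate(glyph):
            for dx, ch in enumerate(line):
                if ch == "#":
                    image[y0 + dy][x0 + dx] = 1
                    if is_anomaly:
                        # Segmentation label: only pixels of anomalous symbols.
                        mask[y0 + dy][x0 + dx] = 1
        if is_anomaly:
            # Detection label: the cell enclosing the anomalous symbol.
            boxes.append((x0, y0, x0 + GLYPH, y0 + GLYPH))
    return image, sorted(boxes), mask


rng = random.Random(0)
image, boxes, mask = make_sample(rows=4, cols=6, n_anomalies=2, rng=rng)
print(len(boxes), sum(map(sum, mask)))
```

The sketch produces both label types from a single generator, which reflects the abstract's point that detection and segmentation are separate targets whose sample requirements can be compared; a real generator would likely add noise, varied positions, and more varied anomalies.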

[Publication]

[Publication (Japanese)]