Synthesizing Realistic Image-based Avatars by Body Sway Analysis

[Abstract]

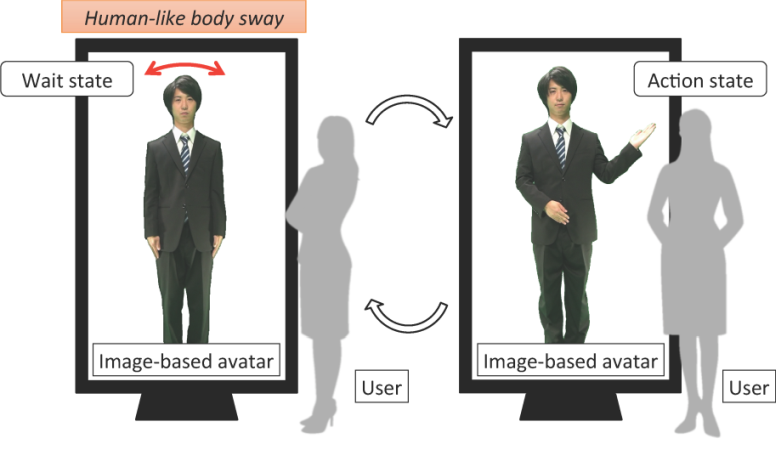

We propose a method for synthesizing body sway to give human-like movement to image-based avatars. This method is based on an analysis of body sway in real people. Existing methods mainly handle the action states of avatars without sufficiently considering the wait states that exist between them. The wait state is essential for filling the periods before and after interaction. Users require both wait and action states to communicate naturally with avatars in interactive systems. Our method measures temporal changes in the body sway motion of each body part of a standing subject using a single-camera video sequence. We synthesize a new video sequence with body sway over an arbitrary length of time by randomly transitioning between points in the sequence at which the motion is close to zero. The results of a subjective assessment show that avatars with body sway synthesized by our method appeared more alive to users than those using baseline methods.
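The transition idea in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the per-frame motion has already been reduced to a single magnitude per frame (the abstract describes measurements per body part), and the names `find_rest_frames`, `synthesize_indices`, `threshold`, and `jump_prob` are hypothetical choices made only for this illustration.

```python
import random


def find_rest_frames(motion, threshold):
    """Return indices of frames whose motion magnitude is below threshold."""
    return [i for i, m in enumerate(motion) if abs(m) < threshold]


def synthesize_indices(motion, length, threshold=0.05, jump_prob=0.5, seed=None):
    """Build a frame-index sequence of the requested length.

    Playback advances frame by frame. At a near-zero-motion ("rest") frame it
    may jump to another randomly chosen rest frame. Assumption of this sketch:
    when the source sequence runs out, playback also restarts at a rest frame.
    """
    rng = random.Random(seed)
    rest = find_rest_frames(motion, threshold)
    if not rest:
        raise ValueError("no near-zero-motion frames to transition between")
    rest_set = set(rest)
    last = len(motion) - 1

    current = rng.choice(rest)  # start from a rest frame
    out = []
    while len(out) < length:
        out.append(current)
        jump = current in rest_set and rng.random() < jump_prob
        if current == last or jump:
            current = rng.choice(rest)  # random transition between rest frames
        else:
            current += 1  # ordinary forward playback
    return out


if __name__ == "__main__":
    # Hypothetical motion magnitudes for a 9-frame source clip.
    motion = [0.0, 0.3, 0.5, 0.2, 0.01, 0.4, 0.6, 0.02, 0.3]
    print(synthesize_indices(motion, 20, seed=1))
```

The returned indices would then be used to pick frames from the source video in order. Because jumps occur only between frames with little motion, the cuts are less likely to be visible, which is the intuition behind transitioning where the motion is close to zero.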

[Publication]

[Publication (Japanese)]

[Short movie]